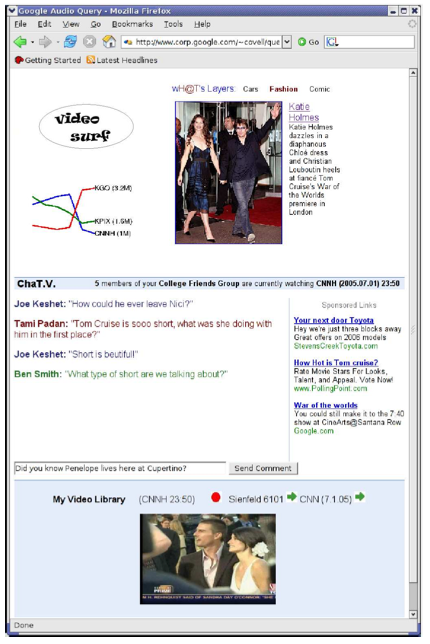

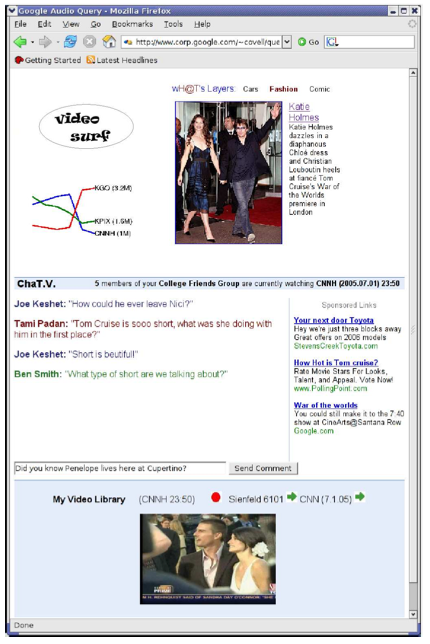

Google Research on “Social- and Interactive-Television Applications Based on Real-Time Ambient-Audio Identification”

The Google Research team won the best paper award at last week’s Euro ITV (the interactive television conference) for research just posted to the Google Research blog. Their topic? Personalized experiences synchronous with mass-media consumption. That means a system in which your computer listens to the TV in your living room, compresses the sound into summary statistics for comparison against a Google-sized audio database, and then offers you online services related to whatever you are watching.

This does not appear to be functional yet, though the paper suggests it would not require much new technology.
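To make the listen, compress, and compare step concrete, here is a minimal Python sketch of audio fingerprinting and lookup. It is not the Google paper’s method. As a stand-in it uses a simplified form of the band-energy-difference fingerprint described by Haitsma and Kalker (2002), with equal-width bands and non-overlapping frames. The frame size, band count, `max_ber` threshold, and the names `fingerprint` and `identify` are assumptions for illustration, and the “shows” are just seeded random noise.

```python
import math
import random

FRAME = 128  # samples per frame (assumed; real systems use longer, overlapping frames)
BANDS = 17   # adjacent-band differences give 16 bits per frame (assumed)

# Precomputed DFT basis for bins 0..FRAME/2, so each frame needs no trig calls.
_COS = [[math.cos(2 * math.pi * k * t / FRAME) for t in range(FRAME)]
        for k in range(FRAME // 2 + 1)]
_SIN = [[math.sin(2 * math.pi * k * t / FRAME) for t in range(FRAME)]
        for k in range(FRAME // 2 + 1)]


def band_energies(frame):
    """Power in BANDS equal-width frequency bands, computed with a naive DFT."""
    power = []
    for k in range(1, FRAME // 2 + 1):  # skip the DC bin
        re = sum(x * c for x, c in zip(frame, _COS[k]))
        im = sum(x * s for x, s in zip(frame, _SIN[k]))
        power.append(re * re + im * im)
    width = len(power) // BANDS
    return [sum(power[b * width:(b + 1) * width]) for b in range(BANDS)]


def fingerprint(samples):
    """One 16-bit code per frame (from the second frame on). Bit m is the sign
    of the change over time of the energy difference between bands m and m+1."""
    frames = [samples[i:i + FRAME]
              for i in range(0, len(samples) - FRAME + 1, FRAME)]
    energies = [band_energies(f) for f in frames]
    codes = []
    for prev, cur in zip(energies, energies[1:]):
        code = 0
        for m in range(BANDS - 1):
            delta = (cur[m] - cur[m + 1]) - (prev[m] - prev[m + 1])
            code = (code << 1) | (1 if delta > 0 else 0)
        codes.append(code)
    return codes


def bit_error_rate(a, b):
    """Fraction of differing bits between two equal-length code sequences."""
    errors = sum(bin(x ^ y).count("1") for x, y in zip(a, b))
    return errors / (len(a) * (BANDS - 1))


def identify(query_codes, database, max_ber=0.25):
    """Slide the query over every reference; return (show, frame_offset, ber)
    for the best alignment, or None if no alignment is within max_ber."""
    best = None
    for show, ref in database.items():
        for off in range(len(ref) - len(query_codes) + 1):
            ber = bit_error_rate(query_codes, ref[off:off + len(query_codes)])
            if best is None or ber < best[2]:
                best = (show, off, ber)
    if best is not None and best[2] <= max_ber:
        return best
    return None


rng = random.Random(0)
show_a = [rng.uniform(-1, 1) for _ in range(FRAME * 40)]
show_b = [rng.uniform(-1, 1) for _ in range(FRAME * 40)]
database = {"show_a": fingerprint(show_a), "show_b": fingerprint(show_b)}

clip = show_b[FRAME * 12:FRAME * 22]  # ten frames "overheard" from show_b
print(identify(fingerprint(clip), database))  # ('show_b', 12, 0.0)
```

The lookup here is a brute-force scan over every alignment; a database at anything like Google scale would need an index on the per-frame codes instead.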

Advertising? Wasn’t discussed. The examples the Google scientists provided fell into the following four categories:

- personalized information layers

- ad hoc social peer communities

- real-time popularity ratings

- TV-based bookmarks

Of course, advertising can be made contextual to any of those, as the hypothetical screenshot above from the Google paper shows. There will also be the option of selecting a Two Minutes Hate’s worth of advertising in exchange for access to premium content. Just kidding about that part. The rest of this is real, though.

“If friends of the viewer were watching the same episode of ‘Seinfeld’ at the same time,” the paper says, “the social-application server could automatically create an on-line ad hoc community of these ‘buddies’.”
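The “buddies” idea amounts to a grouping step on the server. Here is a minimal sketch, assuming each client reports which program it matched and when. The `Match` record, the 60-second window, the friend map, and the program IDs are all hypothetical, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Match:
    """One report from a viewer's client: which program it matched, and when."""
    user: str
    show: str           # identified program (hypothetical IDs below)
    reported_at: float  # wall-clock time of the report, in seconds


def buddies_watching_together(matches, friends, window=60.0):
    """For each viewer, the friends whose latest match is the same show and
    was reported within `window` seconds of the viewer's own latest match."""
    latest = {}  # user -> that user's most recent Match
    for m in matches:
        if m.user not in latest or m.reported_at > latest[m.user].reported_at:
            latest[m.user] = m

    communities = {}
    for user, mine in latest.items():
        group = {
            f for f in friends.get(user, set())
            if f in latest
            and latest[f].show == mine.show
            and abs(latest[f].reported_at - mine.reported_at) <= window
        }
        if group:  # only viewers with at least one buddy get a community
            communities[user] = group
    return communities


matches = [
    Match("alice", "seinfeld", 1000.0),
    Match("bob", "seinfeld", 1030.0),
    Match("carol", "seinfeld", 1010.0),
    Match("dave", "evening-news", 1005.0),
]
friends = {"alice": {"bob", "dave"}, "bob": {"alice"}, "dave": {"alice"}}
print(buddies_watching_together(matches, friends))
# {'alice': {'bob'}, 'bob': {'alice'}}
```

Carol is watching the same episode but is not on anyone’s friend list, so she is left out; Dave is Alice’s friend but is watching something else.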

The paper assures skeptics that privacy will be protected technically:

“The viewer’s acoustic privacy is maintained by the irreversibility of the mapping from audio to summary statistics. Unlike the speech-enabled proactive agent by Hong et al. (2001), our approach will not ‘overhear’ conversations. Furthermore, no one receiving (or intercepting) these statistics is able to eavesdrop on such conversations, since the original audio does not leave the viewer’s computer and the summary statistics are insufficient for reconstruction. Further, the system can easily be designed to use an explicit ‘mute/un-mute’ button, to give the viewer full control of when acoustic statistics are collected for transmission.”

It adds: “…input-data rates. This is especially important since we process the raw data on the client’s machine (for privacy reasons), and would like to keep computation requirements at a minimum.”
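One way to see why summary statistics can be irreversible is that the mapping is many-to-one: lots of different audio produces the same summary. Below is a toy Python illustration, assuming a deliberately crude one-bit-per-frame loudness summary rather than the paper’s actual statistics; `AmbientClient`, its `muted` flag (muted by default here), and the frame size are hypothetical. It also shows where a mute switch would sit: while muted, nothing is computed or returned.

```python
import random

FRAME = 256  # samples per frame (assumed)


def frame_energies(samples):
    """Sum of squared 16-bit PCM samples in each non-overlapping frame."""
    return [sum(x * x for x in samples[i:i + FRAME])
            for i in range(0, len(samples) - FRAME + 1, FRAME)]


def summary_bits(samples):
    """Toy summary statistic: 1 bit per frame, set when the frame is louder
    than the one before it. Everything else about the waveform is discarded."""
    e = frame_energies(samples)
    return [1 if cur > prev else 0 for prev, cur in zip(e, e[1:])]


class AmbientClient:
    """Hypothetical client with a mute/un-mute switch."""

    def __init__(self):
        self.muted = True

    def report(self, samples):
        """Return the summary to transmit, or None while muted.
        The raw samples are only read locally and never returned."""
        if self.muted:
            return None
        return summary_bits(samples)


# Shuffling the samples inside every frame scrambles the waveform but leaves
# each frame's energy, and therefore every bit, unchanged (integer sums are exact).
rng = random.Random(1)
audio = [rng.randint(-32768, 32767) for _ in range(FRAME * 10)]
scrambled = []
for i in range(0, len(audio), FRAME):
    chunk = audio[i:i + FRAME]
    rng.shuffle(chunk)
    scrambled.extend(chunk)

print(summary_bits(scrambled) == summary_bits(audio))  # True
print(len(audio) * 16, "bits of audio ->", len(summary_bits(audio)), "summary bits")
# 40960 bits of audio -> 9 summary bits
```

Real fingerprints keep far more than nine bits per clip, so this only illustrates the direction of the argument, not how much privacy any particular statistic provides.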

There’s no mention of localized versions for China, for example. Can the US government be trusted not to demand access to this kind of data? No. I imagine the privacy concerns here are going to be huge. People may go for it, though. I am open to the idea, but I don’t think I like it. Gmail’s contextual advertising doesn’t scare me, though.

This seems like a recipe for nothing but shopping and superficial interaction. I suppose I could debate with people in my “snobby snobs” group about the veracity of a History Channel show. So maybe I’m wrong.

One way or the other, this seems like a pretty viable vision of the future.
